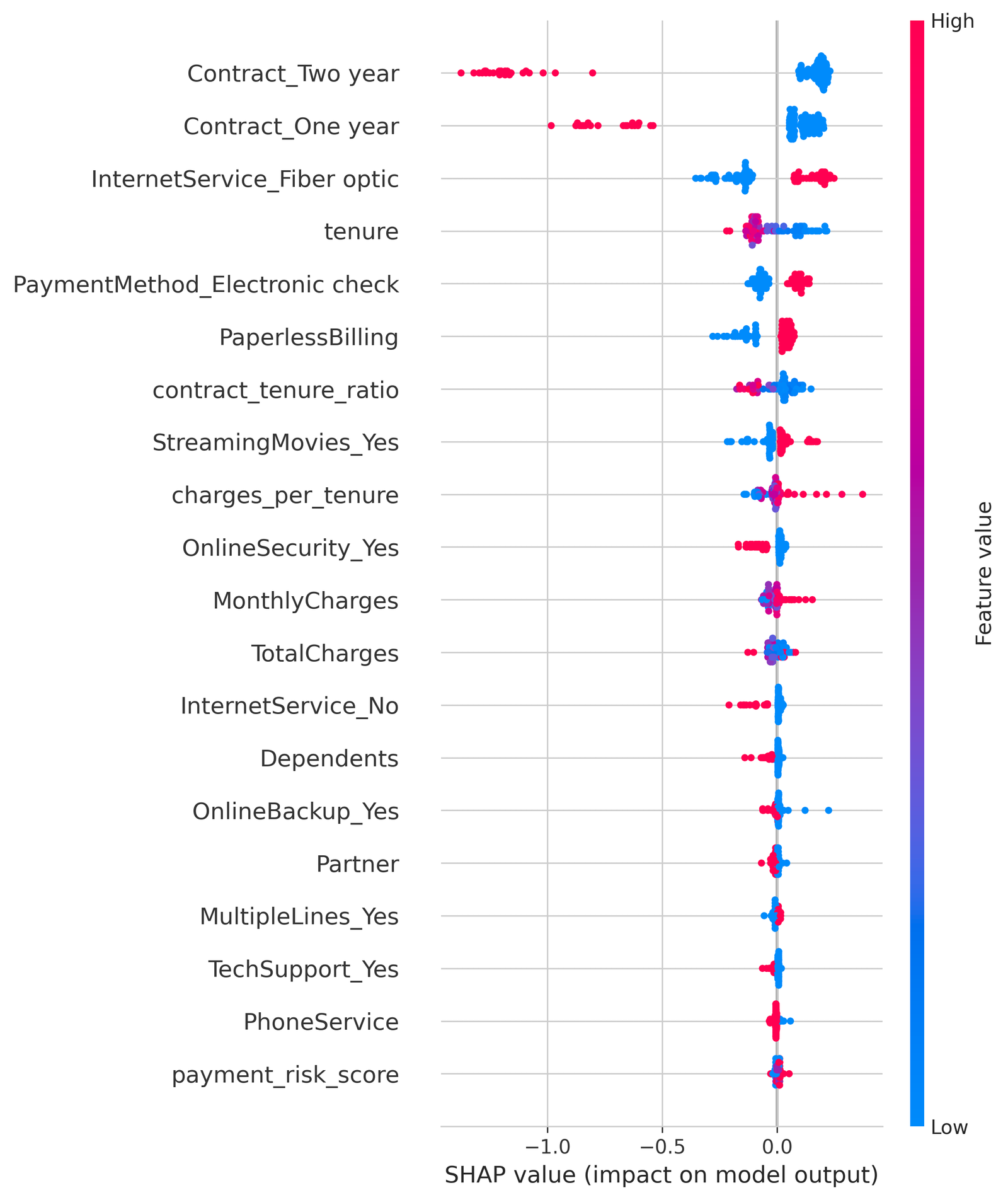

Feature Importance & SHAP Analysis

Understanding what drives churn predictions using SHAP (SHapley Additive exPlanations)
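SHAP attributes each prediction to the input features using Shapley values from cooperative game theory. The sketch below computes exact Shapley values by enumerating every feature coalition. It assumes a "baseline" value function, in which features outside a coalition are replaced by a reference value. This is one common choice; the `shap` library's explainers use their own, more efficient estimators. The model, inputs, and baseline are hypothetical.

```python
from collections.abc import Callable, Sequence
from itertools import combinations
from math import factorial


def exact_shapley(
    f: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Exact Shapley values of f at x, enumerating all feature coalitions.

    Assumption: features outside a coalition S take their baseline value
    (a "baseline Shapley" value function). The cost grows as 2^n model calls,
    so this only suits a handful of features.
    """
    n = len(x)

    def value(coalition: set[int]) -> float:
        # Features in the coalition come from x; the rest from the baseline.
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition S.
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical churn score: two additive terms plus an interaction.
def model(z: Sequence[float]) -> float:
    return 0.5 * z[0] + 0.2 * z[1] + 0.3 * z[0] * z[2]


x = [1.0, 1.0, 1.0]
base = [0.0, 0.0, 0.0]
phi = exact_shapley(model, x, base)
# phi is approximately [0.65, 0.2, 0.15]: the interaction term is split evenly
# between features 0 and 2, and sum(phi) equals model(x) - model(base).
```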

Global Feature Importance (SHAP Values)

Contract Type

Customers on two-year contracts churn at 3%, compared with 42% for month-to-month customers. Contract type is the single strongest predictor.
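Percentages like these are segment churn rates: the share of customers in each category who churned. This is a descriptive statistic, separate from the SHAP values the model assigns to the feature. The minimal stdlib sketch below assumes each customer is a record with hypothetical `contract` and `churned` fields. The toy records are illustrative only and do not reproduce the 3% and 42% figures.

```python
from collections import defaultdict
from collections.abc import Iterable, Mapping


def churn_rate_by(records: Iterable[Mapping], key: str, target: str = "churned") -> dict:
    """Share of churned customers (target is truthy) within each value of `key`."""
    counts = defaultdict(lambda: [0, 0])  # category -> [churned, total]
    for r in records:
        c = counts[r[key]]
        c[0] += int(bool(r[target]))
        c[1] += 1
    return {category: churned / total for category, (churned, total) in counts.items()}


# Illustrative toy data; the field names are hypothetical.
customers = [
    {"contract": "Month-to-month", "churned": True},
    {"contract": "Month-to-month", "churned": False},
    {"contract": "Month-to-month", "churned": True},
    {"contract": "Month-to-month", "churned": False},
    {"contract": "Two year", "churned": False},
    {"contract": "Two year", "churned": False},
]
rates = churn_rate_by(customers, "contract")
# rates == {"Month-to-month": 0.5, "Two year": 0.0}
```

The same function applies unchanged to any other categorical column, such as the payment method.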

Internet Service

Fiber optic customers churn more often, possibly because of higher expectations and higher prices. Customers with no internet service have the lowest churn.

Customer Tenure

New customers (tenure under 12 months) churn at 48%, while customers with 4+ years of tenure churn at 8%. Early engagement is critical.
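Tenure-based rates require bucketing a continuous tenure (in months) before computing churn per bucket. The sketch below assumes hypothetical bucket edges at 12, 24, and 48 months, because the card names only the under-12-month and 4+ year groups. It also uses toy `(tenure_months, churned)` pairs.

```python
from bisect import bisect_right

# Hypothetical bucket edges in months; LABELS[i] covers [EDGES[i-1], EDGES[i]).
EDGES = [12, 24, 48]
LABELS = ["<12 months", "12-23 months", "24-47 months", "48+ months"]


def tenure_bucket(months: int) -> str:
    """Map a tenure in months to its bucket label."""
    return LABELS[bisect_right(EDGES, months)]


def churn_by_tenure(rows: list[tuple[int, bool]]) -> dict[str, float]:
    """Churn rate per tenure bucket from (tenure_months, churned) pairs."""
    totals = {label: [0, 0] for label in LABELS}  # label -> [churned, total]
    for months, churned in rows:
        t = totals[tenure_bucket(months)]
        t[0] += int(churned)
        t[1] += 1
    # Skip empty buckets to avoid division by zero.
    return {label: c / n for label, (c, n) in totals.items() if n}


# Illustrative toy data, not the dataset behind the 48% and 8% figures.
rows = [(3, True), (8, False), (5, True), (30, False), (60, False), (72, False)]
result = churn_by_tenure(rows)
# result has "<12 months" at 2/3, "24-47 months" and "48+ months" at 0.0,
# and no entry for the empty "12-23 months" bucket.
```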

Payment Method

Electronic check users churn at 45%. Automatic payments correlate with lower churn (15%).
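Global importance rankings, such as calling contract type the single strongest predictor, are conventionally derived from a matrix of per-customer SHAP values (one row per customer, one column per feature). Each feature's score is its mean absolute SHAP value, which is what the `shap` library's bar plot shows by default. The stdlib sketch below uses a hypothetical three-customer matrix, not values from the model behind these cards.

```python
def mean_abs_shap(shap_matrix: list[list[float]], feature_names: list[str]) -> list[tuple[str, float]]:
    """Rank features by mean |SHAP value| across customers (rows).

    Absolute values are used because a feature can push some customers toward
    churn and others away from it; averaging raw values would let these cancel.
    """
    n_rows = len(shap_matrix)
    scores = {
        name: sum(abs(row[j]) for row in shap_matrix) / n_rows
        for j, name in enumerate(feature_names)
    }
    # Most important feature first.
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


features = ["Contract Type", "Internet Service", "Customer Tenure", "Payment Method"]
# Hypothetical SHAP values for three customers, one column per feature.
shap_values = [
    [0.9, 0.2, 0.5, -0.1],
    [-0.7, -0.3, 0.4, 0.1],
    [0.8, 0.1, -0.6, 0.1],
]
ranking = mean_abs_shap(shap_values, features)
# Order: Contract Type (0.8), Customer Tenure (0.5), Internet Service (0.2),
# Payment Method (about 0.1).
```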

SHAP Summary Plot

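Each dot represents one customer's value for a given feature. Color indicates the feature value (red = high, blue = low). Horizontal position shows the SHAP value: how strongly that feature pushes the customer's prediction toward churn (positive, to the right) or away from churn (negative, to the left).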
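To make the reading rule concrete, the sketch below describes each dot in one feature's row the way the plot encodes it. It assumes the explained model output is the churn score, so a positive SHAP value pushes toward churn. The feature value is min-max normalized to decide which end of the color scale a dot falls on. The binary red/blue split and the min-max scaling are simplifying assumptions, since the plot itself uses a continuous gradient. The values are hypothetical.

```python
def describe_dots(feature_values: list[float], shap_values: list[float]) -> list[tuple[str, str]]:
    """Describe each summary-plot dot in one feature row as (color, direction)."""
    lo, hi = min(feature_values), max(feature_values)
    span = (hi - lo) or 1.0  # avoid dividing by zero for a constant feature
    dots = []
    for value, phi in zip(feature_values, shap_values):
        level = (value - lo) / span  # 0 = lowest value (blue end), 1 = highest (red end)
        color = "red" if level >= 0.5 else "blue"
        if phi > 0:
            direction = "toward churn"
        elif phi < 0:
            direction = "away from churn"
        else:
            direction = "no effect"
        dots.append((color, direction))
    return dots


# Hypothetical tenure values (months) and their SHAP values for three customers.
dots = describe_dots([2, 30, 70], [0.6, -0.1, -0.5])
# dots == [("blue", "toward churn"), ("blue", "away from churn"), ("red", "away from churn")]
```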